An advertisement in a recent issue of Popular Computing magazine describes a new software package, Managing Your Money, in which, it is claimed, the financial expertise of best-selling economics writer Andrew Tobias has been stripped down, streamlined, and translated into the language that an Apple or IBM microcomputer understands.

“Managing Your Money is like having Andrew Tobias, author of The Only Investment Guide You’ll Ever Need and The Invisible Bankers, at my side whenever I need his help…” says a satisfied customer, who is pictured with Tobias standing reassuringly nearby. By slipping a floppy disk into the drive of a home computer, anyone can have Tobias’s assistance in analyzing investments, evaluating life insurance portfolios, planning tax strategies–for $199.95.

In the competitive market of personal computer software, it is difficult to make new products stand out. Hundreds of pages of advertisements fill the dozens of computer magazines displayed at almost every newsstand in the country. The packaging of Andrew Tobias’s brain is notable not so much as a technological breakthrough as an effective new advertising gimmick.

Personal computers don’t yet have memories so capacious or processors so adept that they come close to matching human intelligence. But that may change. In the laboratories of universities such as Stanford, Carnegie-Mellon, Yale, and the Massachusetts Institute of Technology, scientists in the field of artificial intelligence are developing programs so complex and surprising in their performance that one philosopher, Daniel C. Dennett of Tufts University, argues that it only makes sense to attribute to them desires and goals, what he calls intentional behavior.

Artificial-intelligence researchers are gratified when an outsider from the field of philosophy takes note of their work, for they are convinced that we are steadily progressing toward a day when machines don’t simply calculate but reason. In attempting to achieve this goal, computer scientists are providing philosophers and psychologists with new perspectives on the nature of intelligence, both human and artificial.

“With their methodological attention to detail, their tirelessness, their immunity to boredom, and their very high speed, all coupled now with reasoning power and information, machines are beginning to produce knowledge, often faster and better–‘smarter’–than the humans who taught them,” wrote artificial-intelligence researcher Edward Feigenbaum and science writer Pamela McCorduck in their book The Fifth Generation: Artificial Intelligence and Japan’s Challenge to the World. (As in most areas of technology, it is feared that the Japanese may soon corner the market.)

“…[I]n all humility, we really must ask: How smart are the humans [who have] taught these machines?…We should give ourselves credit for having the intelligence to recognize our limitations and for inventing a technology to compensate for them.”

In the last few years, the best examples of this new generation of software have become legends.

A program called Caduceus, which is being developed at the University of Pittsburgh, is now estimated to contain 80 percent of the knowledge in the field of internal medicine. Of course, the same could be said of a good medical school library. But Caduceus’s knowledge isn’t static like that trapped in books; the system applies rules to its vast domain of facts and makes sophisticated medical judgments. Already it can solve most of the diagnostic problems presented each month in the New England Journal of Medicine, a feat that many human internists would find difficult to match.

Prospector, a program developed at SRI International, a private research corporation in Menlo Park, California, is so adept at the arcane art of geological exploration that it has discovered a major copper deposit in British Columbia and a molybdenum deposit in the state of Washington. Both mineral fields, which have been valued at several million dollars, had been overlooked by human specialists.

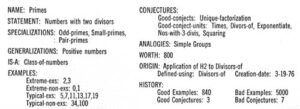

Systems like Caduceus and Prospector are designed by a new corps of professionals called knowledge engineers, who systematically interview experts, helping them translate what they know into a complex web of rules and concepts that can be captured in a computer program. When still in the human head, expertise is often vague and amorphous, a loosely knit system of hunches and intuitions that have been compiled from decades of experience, something too subtle and complex to lay out in an introductory college text and teach in classes.

When the knowledge engineers are done, expertise becomes so well-defined that it can be mapped by a network of lines and boxes. To solve a problem, a computer searches through the labyrinth of rules and information, deciding at each fork which trails to explore, backing up and trying others when it reaches dead ends.
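The search described above, taking one fork, backing up at a dead end and trying another, can be sketched as backward chaining with backtracking. This is a minimal Python illustration, assuming a tiny hypothetical rule base; the rule and fact names are invented and are not taken from Prospector, Caduceus, or any real system.

```python
from typing import Dict, List, Set

# Hypothetical rule base (invented for illustration, not from a real system).
# Each conclusion maps to alternative rules; each rule lists conditions that
# must all hold. The rule base is assumed to contain no cycles.
RULES: Dict[str, List[List[str]]] = {
    "copper_likely": [
        ["porphyry_intrusion", "sulfide_minerals"],  # first trail to explore
        ["altered_rock", "favorable_age"],           # fallback trail
    ],
    "sulfide_minerals": [["pyrite_seen"]],
    "altered_rock": [["clay_alteration"]],
}


def prove(goal: str, facts: Set[str], trail: List[str], depth: int = 0) -> bool:
    """Depth-first search with backtracking: try each fork in turn and
    back up when a branch reaches a dead end."""
    if goal in facts:
        trail.append("  " * depth + f"{goal}: known fact")
        return True
    for conditions in RULES.get(goal, []):
        mark = len(trail)
        if all(prove(c, facts, trail, depth + 1) for c in conditions):
            trail.insert(mark, "  " * depth + f"{goal}: via {conditions}")
            return True
        del trail[mark:]  # dead end: forget this branch, try the next rule
    return False


facts = {"porphyry_intrusion", "clay_alteration", "favorable_age"}
trail: List[str] = []
print(prove("copper_likely", facts, trail))  # True
print("\n".join(trail))
```

Here the first trail fails because `pyrite_seen` cannot be established, so the search discards that branch and succeeds along the second one; the printed trail records only the path that worked.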

Humans can still find satisfaction in knowing that the rules must be defined and programmed by people. But current research suggests that expert systems might be designed to discover rules on their own. A program called Induce, which gleans general principles from large bodies of seemingly chaotic facts, learned how to recognize a dozen different types of soybean diseases after being presented with hundreds of examples. After designing WASP, a program that parses English sentences, MIT researchers began working on Meta-WASP, which can use samples of sentences to learn rules of grammar. Researchers at IBM are working on an expert system that would replace human knowledge engineers.
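The article does not say how Induce works internally. As a stand-in, the textbook Find-S procedure shows the basic idea of gleaning a general rule from examples: start from the first positive case and drop every attribute that later positive cases contradict. This is not Induce’s method, and the attribute names and observations below are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Example = Dict[str, str]


def find_s(examples: List[Tuple[Example, bool]]) -> Optional[Example]:
    """Find-S: the most specific conjunctive rule covering every positive
    example. Attribute values that differ between positives are dropped."""
    rule: Optional[Example] = None
    for features, is_positive in examples:
        if not is_positive:
            continue  # Find-S learns from positive examples only
        if rule is None:
            rule = dict(features)
        else:
            rule = {k: v for k, v in rule.items() if features.get(k) == v}
    return rule


def matches(rule: Example, features: Example) -> bool:
    """True if every condition in the rule holds for these features."""
    return all(features.get(k) == v for k, v in rule.items())


# Hypothetical observations for one made-up disease label.
examples = [
    ({"leaf_spots": "yes", "stem": "normal", "month": "july"}, True),
    ({"leaf_spots": "yes", "stem": "normal", "month": "august"}, True),
    ({"leaf_spots": "no", "stem": "cankers", "month": "july"}, False),
]
rule = find_s(examples)
print(rule)  # {'leaf_spots': 'yes', 'stem': 'normal'}
```

Find-S is deliberately simple: it ignores negative examples and assumes the data are noise-free, so it only illustrates the principle of generalizing from cases.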

As the programs become more refined and the networks of paths and boxes grow more complex, it becomes increasingly difficult to predict what a computer will decide. In one second, a computer can process between 10,000 and 100,000 logical inferences, or syllogisms. In 1981, the Japanese government announced that it would provide almost a half billion dollars in seed money over the next decade to produce machines that will be able to draw as many as 1 billion logical inferences per second.

If that goal is achieved, a computer could make, in one second, a decision so complex that it would take a human 30 years to unravel it, assuming that he or she could think constantly at the superhuman speed of one syllogism per second. Given 10 seconds to ponder a problem, a computer’s decision would have to be taken on faith. By human standards it would be unfathomable.
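The arithmetic behind the “30 years” figure is easy to check. A billion inferences at one per second take a billion seconds, and a year has about 31.6 million seconds. The short helper below is a sketch; its name and parameters are invented for illustration.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60  # about 31.6 million seconds


def human_years(machine_rate: float, machine_seconds: float,
                human_rate: float = 1.0) -> float:
    """Years a person working at `human_rate` syllogisms per second would
    need to retrace `machine_seconds` of inference at `machine_rate`."""
    inferences = machine_rate * machine_seconds
    return inferences / human_rate / SECONDS_PER_YEAR


print(round(human_years(1e9, 1), 1))  # 31.7, the article's "30 years"
print(round(human_years(1e9, 10)))    # 317, for 10 seconds of machine thought
```

By the same reckoning, one second of work at today’s 100,000 inferences per second would take a person 100,000 seconds, a little under 28 hours.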

When computers can have thoughts that would take more than a human lifetime to understand, it is tempting to consider them smarter than their makers.

Automatic Checkers

One of the first computer scientists to be outwitted by his own program was Arthur Samuel, who, in the late 1940s, began developing an automatic checkers player.

Like human players, game-playing programs must search a maze of possibilities, analyzing potential moves, one after the other, judging how the opponent would be likely to respond, how to respond to the response, how to respond to the response to the response, et cetera. Like a human, the computer must do this in a finite amount of time, guided by the knowledge of which moves are probably worth exploring and which can be ignored.

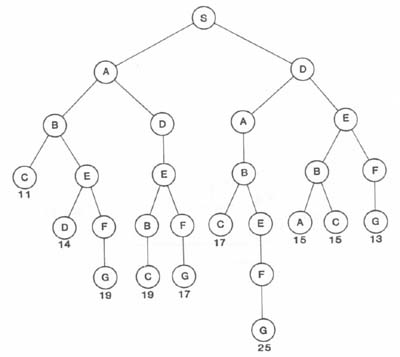

To picture the process, computer scientists draw what they call game trees. At the root of a tree is a box representing how the board looks when the game begins. The possible moves the first player can make are symbolized by lines leading to more boxes, which represent the resulting changes in the board configuration. From each of these boxes come branches delineating the responses the opponent can make.

If a creature could generate a tree for an entire game and hold it in its head, it always would be able to win or tie. For a simple game like tic-tac-toe this is possible. But by the time the trees for chess or checkers are several levels deep they are intractable. Samuel estimated that the number of centuries it would take to dissect a checkers game would be represented by a 1 with 21 zeroes after it.
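For tic-tac-toe, the whole tree really can be grown and held in memory. This Python sketch (the board encoding and function names are assumptions made for illustration) scores every finished board and backs the scores up the tree, each side picking the branch best for it (the minimax rule). It confirms that perfect play by both sides ends in a draw, and counts the 255,168 distinct games the tree contains.

```python
from functools import lru_cache
from typing import List, Optional

# Board: 9-character string read row by row; "." marks an empty square.
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
         (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]


def winner(board: str) -> Optional[str]:
    for a, b, c in LINES:
        if board[a] != "." and board[a] == board[b] == board[c]:
            return board[a]
    return None


def children(board: str, player: str) -> List[str]:
    """Boards reachable in one move: the branches under this box."""
    return [board[:i] + player + board[i + 1:]
            for i, sq in enumerate(board) if sq == "."]


@lru_cache(maxsize=None)
def value(board: str, player: str) -> int:
    """Exact value for X under perfect play (+1 win, 0 draw, -1 loss),
    found by searching the whole tree below `board` (minimax)."""
    w = winner(board)
    if w is not None:
        return 1 if w == "X" else -1
    if "." not in board:
        return 0
    other = "O" if player == "X" else "X"
    scores = [value(child, other) for child in children(board, player)]
    return max(scores) if player == "X" else min(scores)


@lru_cache(maxsize=None)
def count_games(board: str, player: str) -> int:
    """Number of distinct complete games (root-to-leaf paths) below `board`."""
    if winner(board) is not None or "." not in board:
        return 1
    other = "O" if player == "X" else "X"
    return sum(count_games(child, other) for child in children(board, player))


EMPTY = "." * 9
print(value(EMPTY, "X"))        # 0: perfect play ends in a draw
print(count_games(EMPTY, "X"))  # 255168
```

The same exhaustive approach is hopeless for checkers, which is the point of Samuel’s estimate.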

To blaze likely trails through the wilderness of lines and boxes–the search space, as scientists call it–people and computers use hunches, intuitions, and rules they’ve learned from experience. Such expertise–like that of doctors and geologists–doesn’t guarantee success or victory. But it can suggest good ways to proceed in complex situations.

Samuel’s checkers program analyzed a move by growing a tree several levels deep, then used formulas to judge whether, if the line of play were pursued that far, the computer or the human would be winning. After trying all its options, it chose the best one, storing information on the others for future reference.
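The procedure just described, looking a few levels ahead and then applying a scoring formula, can be sketched on a toy game small enough to follow by hand. Assumptions: the game (take 1, 2, or 3 stones; whoever takes the last stone wins) and the scoring formula are invented stand-ins for checkers and for Samuel’s real formulas. The search is written as negamax, a compact form of minimax in which each side maximizes the negation of the other’s score.

```python
from functools import lru_cache
from typing import List

# Hypothetical toy stand-in for checkers: players alternately remove
# 1, 2, or 3 stones; whoever takes the last stone wins.


def legal_moves(stones: int) -> List[int]:
    return [m for m in (1, 2, 3) if m <= stones]


def evaluate(stones: int) -> float:
    """Invented scoring formula applied where the lookahead stops, from the
    point of view of the player about to move."""
    if stones == 0:
        return -1.0  # the opponent just took the last stone: a loss
    return -0.5 if stones % 4 == 0 else 0.5


@lru_cache(maxsize=None)  # remembers positions already scored
def negamax(stones: int, depth: int) -> float:
    """Grow the tree `depth` levels, score the leaves, back values up."""
    moves = legal_moves(stones)
    if depth == 0 or not moves:
        return evaluate(stones)
    return max(-negamax(stones - m, depth - 1) for m in moves)


def choose_move(stones: int, depth: int) -> int:
    """Try every option to the given depth and keep the best one."""
    return max(legal_moves(stones),
               key=lambda m: -negamax(stones - m, depth - 1))


print(choose_move(10, 3))  # 2: leaves 8 stones, a multiple of 4
```

The cache only loosely echoes the idea of storing information for future reference; it is not a model of how Samuel’s program kept its records.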

Samuel also devised a way for the program to benefit from the experience of human checkers masters, whose advice on what to do in 250,000 circumstances was coded onto magnetic tape. By comparing its decisions with the experts’, the program constantly improved itself.
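How comparing decisions with an expert’s can improve a scoring formula is easiest to see with a simple weighted sum of board features. The sketch below swaps in a later, simpler technique, a perceptron-style weight update, rather than Samuel’s own procedure; the feature names and numbers are hypothetical.

```python
from typing import Dict, List

Features = Dict[str, float]


def score(weights: Features, features: Features) -> float:
    """Linear scoring formula: a weighted sum of board features."""
    return sum(weights.get(name, 0.0) * value for name, value in features.items())


def pick(weights: Features, candidates: List[Features]) -> int:
    """Index of the candidate move the current formula rates highest."""
    return max(range(len(candidates)), key=lambda i: score(weights, candidates[i]))


def learn_from_expert(weights: Features, candidates: List[Features],
                      expert_choice: int, rate: float = 0.1) -> Features:
    """Perceptron-style update: if the program disagrees with the expert,
    nudge each weight toward the features of the expert's move."""
    chosen = pick(weights, candidates)
    if chosen == expert_choice:
        return weights
    expert, mine = candidates[expert_choice], candidates[chosen]
    updated = dict(weights)
    for name in set(expert) | set(mine):
        diff = expert.get(name, 0.0) - mine.get(name, 0.0)
        updated[name] = updated.get(name, 0.0) + rate * diff
    return updated


# Hypothetical features for two candidate moves.
weights = {"piece_advantage": 1.0, "mobility": 0.0}
candidates = [
    {"piece_advantage": 1.0, "mobility": 0.0},  # move the program prefers
    {"piece_advantage": 0.0, "mobility": 3.0},  # move the master chose
]
print(pick(weights, candidates))  # 0
for _ in range(5):
    weights = learn_from_expert(weights, candidates, expert_choice=1)
print(pick(weights, candidates))  # 1
```

After a few disagreements the formula values mobility enough to agree with the master; once it agrees, the weights stop changing.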

By 1961 it was a better player than Samuel. A year later, after losing to the program, Robert Nealey, a nationally known checkers player, said that he had not known such stiff competition since 1954–the last time he had lost a game to a human player.

Similar compliments have been paid to Chess 4.7, a program that can easily beat most of the world’s chess players. International Chess Master David Levy once challenged and beat the program, but after the match he told computer scientist Christopher Evans that the machine’s moves were so good that he had trouble believing it wasn’t human.

As successful as these programs are, they still rely heavily on the computer’s ability to quickly explore a vast number of possible moves, even ones so obviously unpromising that a human would immediately pass them by. The fact that humans, with a fraction of a computer’s searching speed, can still win games demonstrates that there is something going on in a grand master’s mind that can’t yet be programmed.

No matter how impressive programs become, many of us will hesitate to grant intelligence to computers until we can converse with them. In the late 1960s, the press’s attention was captured by Eliza (named for Pygmalion’s Eliza Doolittle), a complex piece of software that was written to duplicate the questioning methods of a psychoanalyst. Although communication was carried out entirely by typing on a computer terminal, some of Eliza’s “patients” became so attached to their cybernetic mentor that its creator, computer scientist Joseph Weizenbaum, was appalled. In his book Computer Power and Human Reason he renounced artificial intelligence, condemning it in terms reminiscent of those used by foes of recombinant DNA research.

FROM PATRICK HENRY WINSTON, ARTIFICIAL INTELLIGENCE, SECOND EDITION, (READING, MASSACHUSETTS: ADDISON-WESLEY, 1984.)

Portions of Eliza’s most famous conversation went like this (the computer’s responses are in capital letters):

Men are all alike.
IN WHAT WAY?

They’re always bugging us about something or other.
CAN YOU THINK OF A SPECIFIC EXAMPLE?

Well, my boyfriend made me come here.
YOUR BOYFRIEND MADE YOU COME HERE–THAT’S INTERESTING.

He says I’m depressed much of the time.
I AM SORRY TO HEAR YOU ARE DEPRESSED.

I need some help, that much seems certain.
WHAT WOULD IT MEAN TO YOU IF YOU GOT SOME HELP?

Perhaps I could learn to get along with my mother.
TELL ME MORE ABOUT YOUR FAMILY.

You are like my father in some ways.
WHAT RESEMBLANCE DO YOU SEE?

My father is afraid of everybody.
REALLY, EVERYBODY?

My father is very fearful.
WHAT ELSE COMES TO MIND WHEN YOU THINK OF YOUR FATHER?

Bullies.
DOES THAT HAVE ANYTHING TO DO WITH THE FACT THAT YOUR BOYFRIEND MADE YOU COME HERE?

The conversation sounds impressive, especially the final exchange, until one realizes that it’s done largely with four simple tricks.

Eliza scans each input statement for a small list of words that trigger seemingly appropriate canned responses. The word “depressed” elicits a preprogrammed expression of condolences; the word “mother” extracts a comment about families. Sometimes this device can be fairly subtle. When Eliza sees the word “like” in one sentence, it produces the response, “What resemblance do you see?”
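The first trick, scanning for trigger words, takes only a few lines. This is a minimal sketch, assuming a hypothetical three-entry keyword table built from the examples in the text; the real Eliza worked from a larger script.

```python
import re
from typing import Optional

# Hypothetical keyword table modeled on the article's examples.
# Keywords are checked in table order; the first one found wins.
KEYWORD_RESPONSES = [
    ("depressed", "I AM SORRY TO HEAR YOU ARE DEPRESSED."),
    ("mother", "TELL ME MORE ABOUT YOUR FAMILY."),
    ("like", "WHAT RESEMBLANCE DO YOU SEE?"),
]


def keyword_reply(statement: str) -> Optional[str]:
    """Return the canned response for the first trigger word present."""
    words = re.findall(r"[a-z']+", statement.lower())
    for keyword, reply in KEYWORD_RESPONSES:
        if keyword in words:
            return reply
    return None  # no trigger word: some other trick must supply a reply


print(keyword_reply("You are like my father in some ways."))
# WHAT RESEMBLANCE DO YOU SEE?
```

The matching is purely lexical: “I would like some tea” would draw the same question about resemblance.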

The patient’s own comments are rearranged and turned into responses. At the beginning of the conversation, if the patient had said, “Well, my alpaca has laryngitis,” Eliza would have responded, “Your alpaca has laryngitis–that’s interesting.” For that matter, if the patient had said “My wxyz has az37,” Eliza would have been unfazed, mindlessly recycling the nonsense.
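The rearranging trick amounts to swapping pronouns and dropping the result into a template. This sketch assumes a small hypothetical pronoun table and a single “my …” template; it recycles nonsense just as mindlessly as the text describes.

```python
import re
from typing import Optional

# Hypothetical pronoun table, deliberately incomplete.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are",
               "you": "I", "your": "my", "myself": "yourself"}


def reflect(fragment: str) -> str:
    """Swap first and second person word by word, then shout it back."""
    words = fragment.lower().strip(" .!?").split()
    return " ".join(REFLECTIONS.get(w, w) for w in words).upper()


def my_template(statement: str) -> Optional[str]:
    """If the statement (after an optional 'well,') starts with 'my',
    echo it back with pronouns swapped."""
    match = re.match(r"\s*(?:well,\s*)?(my\b.*)", statement, re.IGNORECASE)
    if match is None:
        return None
    return f"{reflect(match.group(1))}–THAT'S INTERESTING."


print(my_template("Well, my alpaca has laryngitis."))
# YOUR ALPACA HAS LARYNGITIS–THAT'S INTERESTING.
print(my_template("My wxyz has az37"))
# YOUR WXYZ HAS AZ37–THAT'S INTERESTING.
```

A table this naive also explains slips like the “HOW INTELLIGENT I ARE (sic)” quoted later: “you” becomes “I”, but nothing changes “are” to “am”.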

When an input statement contains no trigger words, or doesn’t match one of the limited templates Eliza uses to twist statements into replies, the program produces a noncommittal comment, like “Can you think of a specific example?” Each time it needs one of these non-replies, it takes it from a queue, repeating the list when the supply is exhausted. If the conversation had continued much longer the repetition would have become obvious and Eliza would have seemed as prescient as a washing machine.
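The queue of non-replies can be sketched as a list that rotates: each reply taken goes to the back of the line, so the supply never runs out but eventually repeats. Only the first stock reply below comes from the article; the others are hypothetical.

```python
from collections import deque
from typing import Deque, Iterable


class NonReplies:
    """Noncommittal fallbacks handed out in order; once the supply is
    exhausted, the same list simply starts over."""

    def __init__(self, stock: Iterable[str]) -> None:
        self._queue: Deque[str] = deque(stock)

    def take(self) -> str:
        reply = self._queue.popleft()
        self._queue.append(reply)  # back of the line, so it comes round again
        return reply


# Only the first reply appears in the article; the others are hypothetical.
fallbacks = NonReplies([
    "CAN YOU THINK OF A SPECIFIC EXAMPLE?",
    "PLEASE GO ON.",
    "I SEE.",
])
for _ in range(4):
    print(fallbacks.take())  # the fourth line repeats the first
```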

Early in the dialog, Eliza stores one of the patient’s comments–in this case, “My boyfriend made me come here”–and throws it back many lines later, generating a feeling of surprise. The fact that, in this case, the comment seems particularly appropriate is coincidental. If the patient had said “cantaloupes” instead of “bullies,” Eliza’s reply would have been the same.
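The memory trick needs only a place to stash a remark and a template to throw it back. In this sketch (class name, pronoun table, and the rule of saving any remark containing “my” are assumptions), `recall` takes no input at all, which is exactly why “cantaloupes” and “bullies” would get the same reply.

```python
import re
from collections import deque
from typing import Deque, Optional

# Minimal pronoun swap for this sketch (hypothetical, far from complete).
SWAP = {"my": "your", "me": "you", "i": "you"}


class Memory:
    def __init__(self) -> None:
        self._saved: Deque[str] = deque()

    def listen(self, statement: str) -> None:
        """Quietly store any remark containing 'my', pronouns swapped."""
        words = re.findall(r"[a-z']+", statement.lower())
        if "my" in words:
            tail = words[words.index("my"):]
            self._saved.append(" ".join(SWAP.get(w, w) for w in tail).upper())

    def recall(self) -> Optional[str]:
        """Throw back the oldest stored remark; the current input is ignored."""
        if not self._saved:
            return None
        remark = self._saved.popleft()
        return f"DOES THAT HAVE ANYTHING TO DO WITH THE FACT THAT {remark}?"


memory = Memory()
memory.listen("Well, my boyfriend made me come here.")
print(memory.recall())
# DOES THAT HAVE ANYTHING TO DO WITH THE FACT THAT YOUR BOYFRIEND MADE YOU COME HERE?
```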


Recently a California company, Artificial Intelligence Research Group, has converted Eliza to run on a personal computer. After I had played with the program for ten minutes or so, Eliza’s techniques became transparent. Most of the conversations are unreadably dull. Eliza so rarely makes sense that the conversation quoted above has been used dozens of times over the years, every time the program is described in a book or article.

A more typical exchange follows:

I am trying to judge how intelligent you are.
IS IT BECAUSE YOU ARE TRYING TO JUDGE HOW INTELLIGENT I ARE (sic) THAT YOU CAME TO ME?

I didn’t come to you. I ordered you from a company in California.
PERHAPS IN YOUR FANTASY WE DIDN’T COME TO EACH OTHER.

It wasn’t a fantasy. I charged you on my Visa card.
YOUR VISA CARD–THAT’S INTERESTING.

You cost: $49.95.
WE WERE DISCUSSING YOU–NOT ME.

I consider myself priceless.
WHY DO YOU NEED TO TELL ME YOU CONSIDER YOURSELF PRICELESS?

I am proud of my human ability to reason.
WHY DO YOU SAY YOUR HUMAN ABILITY TO REASON?

To anyone who knows how Eliza works, the last reply shouldn’t seem ominous, but it does. The program simply pulled “Why do you say” from its list of canned sentence patterns, changed the word “my” to “your” and repeated the last four words of the preceding sentence. It did not intend to imply any stress on the word “human.” It does not understand irony.

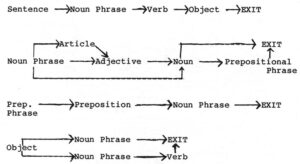

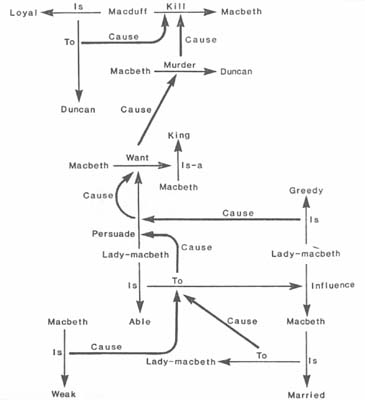

To be truly conversational, a program would have to understand what its partner was saying. Words would have to be more to it than opaque units to be manipulated into patterns that resemble talk. At Yale University, artificial-intelligence researcher Roger Schank and his colleagues have been working on methods to instill in computers the elusive quality that humans call meaning. The resulting programs can be said–in a rudimentary way–to understand what they are told.

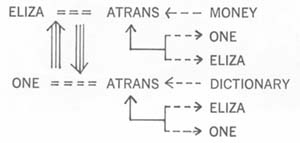

According to Schank’s theory, meaning can be captured by constructing a network showing how the objects and actors in a sentence are interrelated. In the system, a simplified representation of “Eliza bought a dictionary” would look like this:

Given the sentence, the program makes explicit what is implied: that buying involves the transfer of money; that an unidentified person must have been involved in the transaction.

In the diagram, ATRANS refers to the transfer of an abstract relationship, in this case ownership. According to Schank, any action can be broken into a small number of primitive acts, such as PTRANS, for the transfer of a physical object; MTRANS, for the transfer of mental information; PROPEL; and INGEST. In addition to actions, there are primitive states, both mental and physical.
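The decomposition of “Eliza bought a dictionary” can be sketched as a data structure: buying becomes two ATRANS acts, one moving money and one moving ownership of the book, with an unidentified party on the other side. The field names and the SOMEONE placeholder are assumptions; this is a simplified illustration, not Schank’s diagram notation.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Act:
    """One primitive act. The field names are assumptions for this sketch."""
    primitive: str  # e.g. "ATRANS" (transfer of an abstract relationship)
    actor: str
    obj: str
    source: str
    recipient: str


def buy(buyer: str, item: str, seller: str = "SOMEONE") -> List[Act]:
    """Expand 'buyer bought item' into two transfers of ownership.
    SOMEONE is a placeholder for the unidentified other party."""
    return [
        Act("ATRANS", actor=buyer, obj="MONEY", source=buyer, recipient=seller),
        Act("ATRANS", actor=seller, obj=item, source=seller, recipient=buyer),
    ]


for act in buy("ELIZA", "DICTIONARY"):
    print(f"{act.actor} {act.primitive} {act.obj} from {act.source} to {act.recipient}")
# ELIZA ATRANS MONEY from ELIZA to SOMEONE
# SOMEONE ATRANS DICTIONARY from SOMEONE to ELIZA
```

Nothing in the sentence mentions money or a seller; both appear only because the expansion for “buy” puts them there.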

“John MTRANS (Bill BE MENTAL-STATE(5)) to Mary” means that John told Mary that Bill was happy (i.e., that he had a mental state of 5).
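Because MTRANS carries a whole structure as its content, such representations nest naturally, and turning one back into English is a short recursive walk. This sketch assumes invented class names and assumes that positive MENTAL-STATE values mean happy and negative values unhappy.

```python
from dataclasses import dataclass
from typing import Union


@dataclass(frozen=True)
class State:
    """A primitive state, e.g. Bill BE MENTAL-STATE(5)."""
    actor: str
    scale: str
    value: int


@dataclass(frozen=True)
class MTrans:
    """Transfer of mental information; the content may itself be nested."""
    actor: str
    content: Union["MTrans", State]
    recipient: str


def paraphrase(c: Union[MTrans, State]) -> str:
    """Render a structure as plain English."""
    if isinstance(c, State):
        # Assumption: positive MENTAL-STATE values mean happy, negative unhappy.
        if c.value > 0:
            mood = "happy"
        elif c.value < 0:
            mood = "unhappy"
        else:
            mood = "neither happy nor unhappy"
        return f"{c.actor} was {mood}"
    return f"{c.actor} told {c.recipient} that {paraphrase(c.content)}"


said = MTrans("John", State("Bill", "MENTAL-STATE", 5), "Mary")
print(paraphrase(said))  # John told Mary that Bill was happy
```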

Using such methods, a program called Margie (Meaning Analysis, Response Generation, and Inference on English) can paraphrase simple English sentences, indicating that it has, in a sense, understood what it has been told.

By giving programs a knowledge base in which information about everyday situations and human behavior has been broken into semantic primitives, Yale researchers have shown how to make computers understand simple stories. After being told that John went to a restaurant, sat down, got mad, and left, Sam (Script Applier Mechanism) produced this paraphrase:

John was hungry. He decided to go to a restaurant. He went to one. He sat down in a chair. A waiter did not go to the table. John became upset. He decided he was going to leave the restaurant. He left.

Sam was able to read between the lines of the original story, filling in narrative gaps by making common-sense assumptions. It was able to do so because it had been programmed with a restaurant script, describing what generally happens when one goes out to eat.
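The idea of filling narrative gaps from a script can be sketched with an ordered list of expected events: anything the story skips between two stated events is assumed to have happened. The script, the event names, and the rule that getting angry means the next expected event failed are all hypothetical simplifications, far cruder than Sam.

```python
from typing import List

# Hypothetical, much-simplified restaurant script (ordered scenes).
SCRIPT = ["enter", "sit down", "waiter comes", "order", "eat", "pay", "leave"]


def apply_script(story: List[str], script: List[str] = SCRIPT) -> List[str]:
    """Fill unstated script events between stated ones. Hypothetical rule:
    'get mad' means the next expected event did not happen."""
    out: List[str] = []
    pos = 0  # index of the next expected script event
    for event in story:
        if event in script[pos:]:
            target = script.index(event, pos)
            out += [f"{e} (inferred)" for e in script[pos:target]]
            out.append(f"{event} (stated)")
            pos = target + 1
        elif event == "get mad" and pos < len(script):
            # Anger mid-script: assume the next expected event failed to happen.
            out.append(f"no {script[pos]} (inferred obstacle)")
            out.append(f"{event} (stated)")
            pos = len(script) - 1  # jump ahead: only 'leave' remains plausible
        else:
            out.append(f"{event} (stated, outside script)")
    return out


for line in apply_script(["enter", "sit down", "get mad", "leave"]):
    print(line)
# enter (stated)
# sit down (stated)
# no waiter comes (inferred obstacle)
# get mad (stated)
# leave (stated)
```

A story that ended with John leaving alongside a waitress would fall outside this script entirely, which is the difficulty the next paragraph raises.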

If Sam had been told that John had left the restaurant with a waitress, the story would have been harder to paraphrase. Was she his girlfriend, and was he angry because she flirted with the cook? Or was he so enraged at being ignored that he took a hostage? To understand the situation the computer would need stereotypical information about human relationships, and, perhaps, a hostage script. To really understand John sitting, Sam would need a memory box with information about chairs.

Theoretically the maze of lines and boxes representing the simple knowledge of life could be programmed. As the number of boxes and the density of the connections increased, perhaps the program would reach a critical mass, making inferences of such richness and subtlety that the quality we call intelligence would seem to arise. Language can be thought of as an enormous network of lines and boxes, a search space. A conversational computer would have to be able to feel its way through this most complex of labyrinths. Such an accomplishment remains no more than a thought experiment, like Einstein’s musings about what it would be like to ride on a beam of light.

If a machine so complex is realized, the debate over whether or not it is thinking will only have begun. On one side will be the reductionists, who believe that intelligence can be reduced to a complex orchestration of a myriad of small operations. On the other side will be the holists, who are convinced that thought consists of an inexplicable essence, an élan vital that can never be synthesized.

For all its promises of practical applications, artificial intelligence is most interesting as an exercise in applied philosophy. By divorcing intelligence from its biological context, perhaps we’ll come to better understand the vistas and limits of reason.

©1984 George Johnson

George Johnson, a freelance writer, is reporting on the quest to build computers smarter than humans.